🎧 When Code Learns to Listen

The future of building isn’t written — it’s spoken.

From Imagining to Building

Every movement has its mythic moment — that instant when something feels like magic before we understand the mechanics behind it. Vibecoding is having that moment right now.

A founder describes an idea in plain language — “a dashboard for freelancers that tracks invoices automatically” — and within minutes, the screen comes alive. Buttons appear. Data flows. It works.

But beneath that illusion of effortlessness sits an entirely new architecture for how software is made. What looks like a “conversation with an AI” is actually a choreography of interpreters, frameworks, and logic engines — each translating human intent into something a computer can understand, run, and remember.

Vibecoding isn’t about skipping code. It’s about changing the direction of translation.

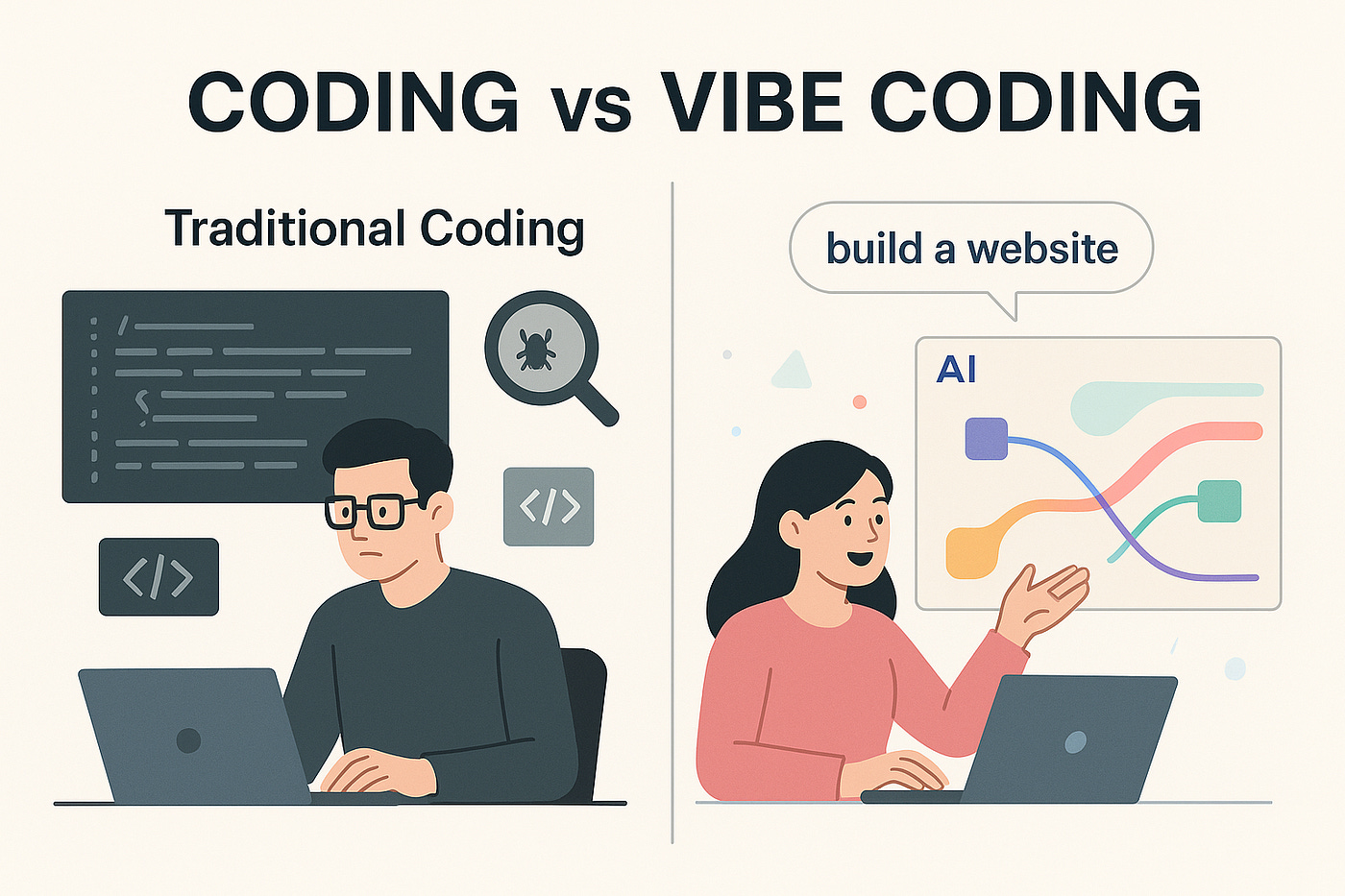

Traditional development starts with syntax — we write instructions for machines. Vibecoding starts with semantics — we describe intentions for systems that can now generate those instructions themselves.

It’s a small shift in language, but a massive shift in leverage. For the first time, describing a product and building one are becoming the same action.

This week, we’ll pull back the curtain on how that actually happens — the five layers that turn vibes into infrastructure. Because the real story of vibecoding isn’t just that it’s faster. It’s that it’s teaching computers how to understand us.

Table of Contents

From Imagining to Building

The Stack of Vibes — How Intent Becomes Infrastructure

Inside the AI — How Large Models Write Code

The Real Art of Vibecoding

The Infrastructure of Trust

The Builders and Their Blueprints — Base44, Lovable, and Solid

The New Discipline — Designing for AI Collaboration

Looking Ahead — From Vibes to Systems

One More Thing

The Stack of Vibes — How Intent Becomes Infrastructure

To understand vibecoding, imagine building a city by describing it to an endlessly patient urban planner. You say, “I need a café on the corner, apartments above, and a park across the street,” and the planner doesn’t just sketch the idea — they start laying bricks before you finish your sentence.

That’s what modern AI-driven builders like Solid, Base44, and Lovable are doing. They translate the messy, creative energy of human intent into precise, structured systems that a computer can actually run. Under the hood, there’s a full architectural process happening — one that mirrors how traditional software is built, just compressed into seconds.

Here’s what that invisible stack looks like:

1. The Translator — Understanding Intent

This is the first act of magic. Large language models listen to your description and begin constructing a mental map: What are the objects, who uses them, and what relationships connect them?

When you say, “make a to-do list,” the system recognises you’ll probably need users, tasks, due dates, and completion states. In developer terms, that’s called a data model — a kind of blueprint that defines what exists inside your app.

At this stage, the AI isn’t writing code yet. It’s identifying the nouns and verbs of your idea — the people, things, and actions that form its grammar.
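Here is a minimal sketch of the data model a builder might infer from “make a to-do list”. It uses Python for brevity. The names `User`, `Task`, `owner_id`, and `done` are illustrative assumptions, not the output of any particular platform.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical data model inferred from "make a to-do list".

@dataclass
class User:                   # noun: the person using the app
    id: int
    email: str

@dataclass
class Task:                   # noun: the thing being tracked
    id: int
    owner_id: int             # relationship: each task belongs to one User
    title: str
    due: Optional[date] = None
    done: bool = False        # completion state

    def complete(self) -> None:
        """Verb: mark the task as finished."""
        self.done = True

alice = User(id=1, email="alice@example.com")
task = Task(id=1, owner_id=alice.id, title="Send invoice", due=date(2025, 1, 31))
task.complete()
print(task.done)  # True
```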

2. The Architect — Designing the Blueprint

Once intent is clear, the platform designs the skeleton. It decides which frameworks to use: for example, React for building the user interface and Node.js for running the backend logic.

If you think of your app as a building, this is the stage where the walls go up, the rooms are outlined, and the plumbing lines are drawn. In traditional coding, this setup can take days of boilerplate. In vibecoding, it happens automatically: the system scaffolds folders, routes, and files as naturally as a builder reading a blueprint.
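The sketch below gives a rough illustration of scaffolding. It writes a small folder skeleton for a React frontend and a Node.js backend. The layout and file names are hypothetical, since each platform chooses its own structure. Python is used only to show the idea of generating boilerplate from a plan.

```python
import tempfile
from pathlib import Path

# Hypothetical project plan: relative path -> placeholder contents.
SKELETON = {
    "frontend/src/App.jsx": "// root React component\n",
    "frontend/src/components/TaskList.jsx": "// renders the user's tasks\n",
    "backend/src/server.js": "// starts the Node.js HTTP server\n",
    "backend/src/routes/tasks.js": "// API routes for tasks\n",
    "backend/src/db/schema.sql": "-- tables derived from the data model\n",
}

def scaffold(root: Path, skeleton: dict[str, str]) -> list[Path]:
    """Create every folder and placeholder file listed in the plan."""
    created = []
    for rel_path, contents in skeleton.items():
        path = root / rel_path
        path.parent.mkdir(parents=True, exist_ok=True)  # create folders on demand
        path.write_text(contents)
        created.append(path)
    return created

with tempfile.TemporaryDirectory() as tmp:
    files = scaffold(Path(tmp), SKELETON)
    names = sorted(p.relative_to(tmp).as_posix() for p in files)
    print(names)
```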

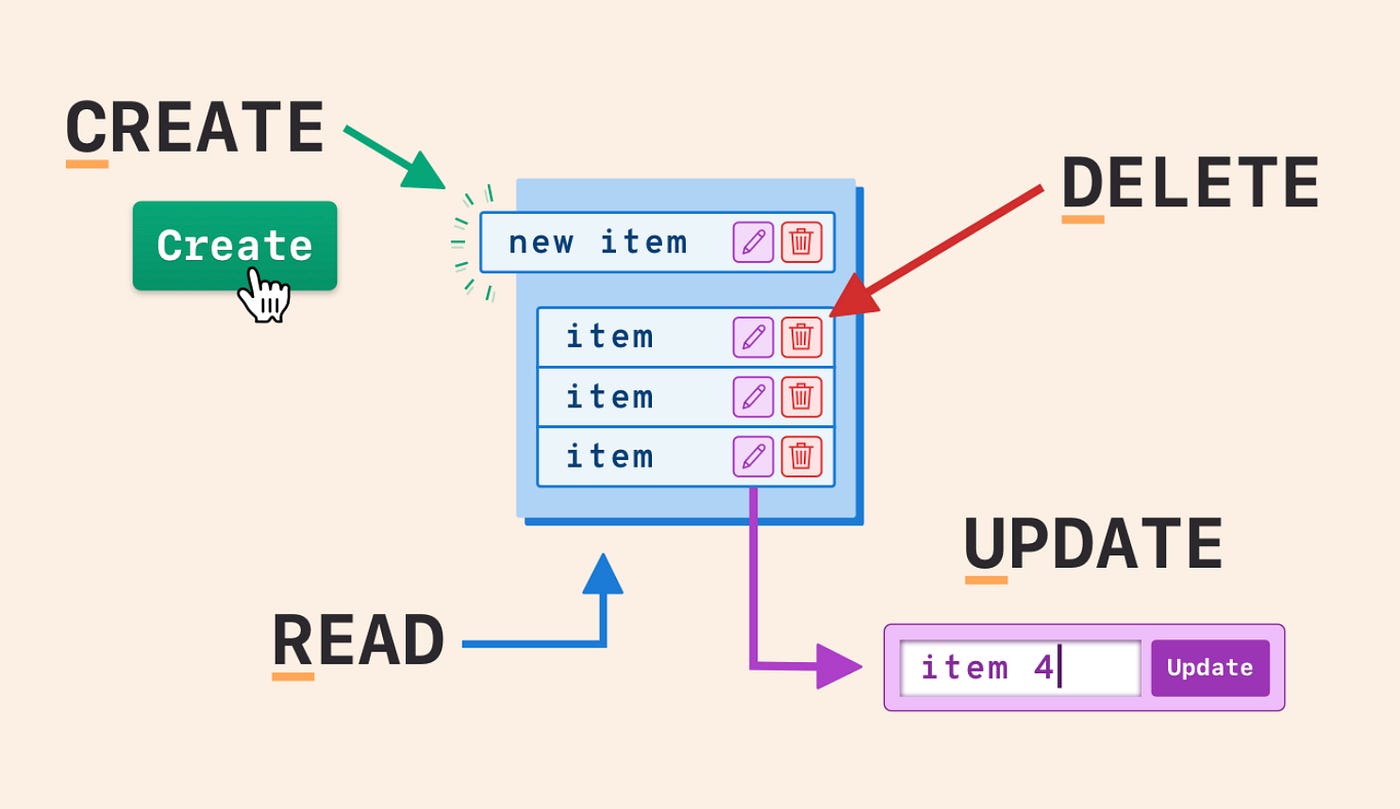

3. The Engineer — Assembling Logic

Here’s where your app starts thinking. The AI connects the moving parts — setting up what developers call CRUD operations, which stands for Create, Read, Update, Delete. These are the basic verbs of any software product: add something, view it, change it, or remove it.

It also wires up API endpoints: the digital doorways through which data travels between your app’s front and back ends. When your browser shows “new task added,” it’s because a request just went through one of those doorways, the backend saved the task to the database, and a confirmation came back.
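The four CRUD verbs map naturally onto code. Below is a minimal in-memory sketch in Python. A dictionary stands in for a real database. The comments show the HTTP method and path a typical REST-style API might route to each operation. Those routes are a common convention assumed here for illustration, not the routes any specific builder generates.

```python
from itertools import count
from typing import Optional

class TaskStore:
    """In-memory stand-in for a database table; each method is one CRUD verb."""

    def __init__(self) -> None:
        self._rows: dict[int, dict] = {}
        self._ids = count(1)          # auto-incrementing primary key

    def create(self, title: str) -> dict:                 # POST   /api/tasks
        task = {"id": next(self._ids), "title": title, "done": False}
        self._rows[task["id"]] = task
        return task

    def read(self, task_id: int) -> Optional[dict]:       # GET    /api/tasks/{id}
        return self._rows.get(task_id)

    def update(self, task_id: int, **changes) -> dict:    # PATCH  /api/tasks/{id}
        task = self._rows[task_id]    # raises KeyError if the task doesn't exist
        task.update(changes)
        return task

    def delete(self, task_id: int) -> bool:               # DELETE /api/tasks/{id}
        return self._rows.pop(task_id, None) is not None

store = TaskStore()
new_task = store.create("Send invoice")
store.update(new_task["id"], done=True)
print(store.read(new_task["id"]))  # {'id': 1, 'title': 'Send invoice', 'done': True}
print(store.delete(new_task["id"]), store.read(new_task["id"]))  # True None
```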

Lightweight builders like Base44 or Lovable typically lean on prebuilt or hosted backends to handle this: fast, but harder to move elsewhere. Solid, by contrast, generates real, open code in Node.js, meaning developers can later edit, extend, or scale it without rebuilding from scratch.

4. The Designer — Shaping the Interface

Now the AI turns structure into experience. It arranges buttons, inputs, and dashboards, usually built as React components, and styles them with frameworks like Tailwind.

This stage is less about making things “look pretty” and more about connecting visual elements to underlying logic. When you click a button, it already knows which CRUD operation it triggers and which API endpoint it talks to.

Think of it like a conductor assigning instruments to melodies: each note you hear is backed by an entire section moving in harmony.

5. The Conductor — Deploying and Syncing Everything

Finally, all the layers come together. The app is deployed — meaning hosted online, connected to its database, and kept alive with updates.

In simpler platforms, this happens behind closed doors: your app lives inside someone else’s ecosystem. In Solid, deployment doesn’t end ownership — you get a full GitHub repository, a living codebase you can run anywhere.

It’s the difference between renting studio time and owning your master recordings.

From the outside, it still feels like magic — describe it, and it appears. But beneath that surface, every request you make triggers a symphony of translators, architects, engineers, and designers working in real time.

That’s the hidden genius of vibecoding: it doesn’t erase complexity. It reorganises it — so that human creativity sits at the top of the stack, not buried inside it.

Inside the AI — How Large Models Write Code

If the Stack of Vibes is the orchestra, then the AI is its conductor — interpreting your intention, predicting each note, and deciding how the melody should sound.

But unlike human programmers, large language models (LLMs) don’t reason like engineers. They don’t understand code the way a developer does. Instead, they work more like expert pattern-recognisers — trained on millions of examples of how humans solve problems through text.

When you type, “Build me a booking platform where users can reserve spaces and leave reviews,” the AI isn’t just repeating memorised snippets. It’s recognising a shape — a familiar pattern of logic, data, and flow that thousands of developers have written before. From that, it begins to predict what comes next.

Step 1: Framing the Problem

First, the model dissects your request into smaller intentions. It spots the entities (“users,” “bookings,” “reviews”), understands their relationships, and sketches the outline of a database.

In traditional software design, this is the stage where a developer might draw boxes and arrows on a whiteboard. The AI just does it internally — compressing that diagram into a data schema that can later become real code.
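Here is one way that whiteboard diagram might look as a database schema for the booking example. This hypothetical sketch uses SQLite from Python's standard library so that it runs anywhere. A generated app might target PostgreSQL instead. Details such as the `spaces` table and the one-review-per-booking rule are assumptions.

```python
import sqlite3

# Entities from the prompt become tables; relationships become foreign keys.
SCHEMA = """
CREATE TABLE users  (id INTEGER PRIMARY KEY, email TEXT NOT NULL UNIQUE);
CREATE TABLE spaces (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE bookings (
    id        INTEGER PRIMARY KEY,
    user_id   INTEGER NOT NULL REFERENCES users(id),   -- a user makes many bookings
    space_id  INTEGER NOT NULL REFERENCES spaces(id),  -- a space has many bookings
    starts_at TEXT NOT NULL
);
CREATE TABLE reviews (
    id         INTEGER PRIMARY KEY,
    booking_id INTEGER NOT NULL UNIQUE REFERENCES bookings(id),  -- one review per booking
    rating     INTEGER NOT NULL CHECK (rating BETWEEN 1 AND 5),
    body       TEXT
);
"""

db = sqlite3.connect(":memory:")
db.executescript(SCHEMA)
tables = [row[0] for row in db.execute(
    "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name")]
print(tables)  # ['bookings', 'reviews', 'spaces', 'users']
```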

Step 2: Predicting the Patterns

Next, the model starts generating structure. This is where its training matters most. Because it’s read so much code, it knows that when a developer defines a “User” table, it’s usually followed by logic like sign up, log in, edit profile, and delete account.

So the AI doesn’t invent the idea of a user system — it recalls the patterns that make one work. Like a jazz musician improvising over a known chord progression, it predicts the right sequence of moves to stay in tune with your intention.

Step 3: Validating What It Writes

Most vibecoding tools don’t stop at generating text. They test what they’ve written — running syntax checks, verifying dependencies, and catching the kind of typos a human might make at 2 a.m.

This feedback loop is what makes AI-assisted coding powerful: it’s not a single prediction, it’s an iterative conversation. The model proposes code, the system tests it, and together they converge on something that actually runs.
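The propose-test-refine loop can be sketched in a few lines. In this example, `stub_model` is a hypothetical stand-in for a real model call. The only check is a Python syntax parse via `ast.parse`. Real tools may also run linters, type checkers, builds, or tests.

```python
import ast
from typing import Callable, Optional

def generate_until_valid(
    propose: Callable[[str, Optional[str]], str],
    prompt: str,
    max_attempts: int = 3,
) -> tuple[str, int]:
    """Ask for code, syntax-check it, and feed any error back for another try."""
    feedback = None
    for attempt in range(1, max_attempts + 1):
        code = propose(prompt, feedback)
        try:
            ast.parse(code)                 # syntax check only; the code is not executed
            return code, attempt
        except SyntaxError as err:
            feedback = f"line {err.lineno}: {err.msg}"  # passed to the next proposal
    raise RuntimeError(f"no valid code after {max_attempts} attempts: {feedback}")

# Hypothetical model stand-in: the first draft is missing a colon,
# and the second draft fixes it once feedback arrives.
def stub_model(prompt: str, feedback: Optional[str]) -> str:
    if feedback is None:
        return "def add(a, b)\n    return a + b\n"
    return "def add(a, b):\n    return a + b\n"

code, attempts = generate_until_valid(stub_model, "write an add function")
print(attempts)  # 2
```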

Solid takes this even further. Because it generates full-stack applications in Node.js, React, and PostgreSQL, it can validate not just snippets of code but the entire architecture — backend routes, frontend components, and database queries — ensuring they all work in harmony.

Step 4: Learning From You

Every time you tweak, edit, or re-prompt, the system refines its sense of what you mean, because your corrections stay in the project history it works from. Over time, this becomes a kind of shared memory between human and machine.

Say you write, “Add an admin dashboard,” and later correct it to “Make it read-only for non-admin users.” The model starts to understand your preferences for access control, style, or data structure — even if you never specify them again.

In essence, the AI becomes your silent collaborator: it learns your rhythm, your shorthand, and even your design instincts.

Step 5: Keeping Context Alive

One of the hardest challenges in AI code generation is context. Codebases are big, while a language model can only take in a limited amount of text per request (its context window, or working “memory”). It can’t see every file at once.

That’s why serious builders like Solid use techniques such as context windowing: selectively feeding only the relevant parts of your project into the model each time it generates new code.

Imagine trying to renovate a single room without looking at the whole blueprint. Context management ensures the AI always knows where the walls are — so your new features don’t accidentally knock down the structure that keeps everything standing.
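The sketch below shows a deliberately simplified version of context selection. It ranks project files by keyword overlap with the request and keeps the most relevant ones until a size budget is reached. This is not how Solid or any specific product implements it. Production systems typically use embeddings for relevance and a real tokenizer for the budget. The file names and contents are hypothetical.

```python
import re

def words(text: str) -> set[str]:
    """Lower-cased alphabetic words; a crude stand-in for real tokenization."""
    return set(re.findall(r"[a-z]+", text.lower()))

def select_context(request: str, files: dict[str, str], budget: int) -> list[str]:
    """Choose relevant files, most relevant first, without exceeding a word budget."""
    query = words(request)
    # Relevance = number of request words that appear in the file's path or text.
    scores = {path: len(query & words(path + " " + text)) for path, text in files.items()}
    chosen, used = [], 0
    for path in sorted(files, key=scores.get, reverse=True):
        size = len(files[path].split())      # word count as a proxy for tokens
        if scores[path] == 0 or used + size > budget:
            continue                         # unrelated, or would overflow the window
        chosen.append(path)
        used += size
    return chosen

project = {
    "backend/routes/bookings.js": "router.post bookings create booking for user",
    "backend/routes/reviews.js": "router.post reviews create review for booking",
    "frontend/Navbar.jsx": "navbar links logo",
}
request = "let users leave reviews on bookings"
print(select_context(request, project, budget=50))  # ['backend/routes/bookings.js', 'backend/routes/reviews.js']
print(select_context(request, project, budget=8))   # ['backend/routes/bookings.js']
```

With the larger budget, both matching route files fit. With the smaller one, only the top-ranked file does. The unrelated `Navbar.jsx` is never sent.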

The Real Art of Vibecoding

What’s happening in all these steps isn’t “magic.” It’s an intelligent collaboration — you describing, the AI predicting, the system validating, and you refining again.

It’s not so different from how humans have always built things — through sketches, feedback, and iteration — just now accelerated by a machine that can write, test, and adjust millions of possibilities in seconds.

In that sense, the AI isn’t replacing developers; it’s replacing friction.

It’s translating creativity into computation — and doing it fast enough that the flow never breaks.

The Infrastructure of Trust

Every great shortcut in technology comes with an invisible cost — usually paid in security, not speed.

When you vibe-code, your app comes alive faster than ever before. It’s thrilling to watch an idea go from words to working product in a single afternoon. But the moment a real user logs in, the game changes. Suddenly, your app isn’t just creative expression — it’s infrastructure.

And infrastructure, no matter how it’s built, runs on trust.

Where the Rules Live

Every app has rules: who can see what, who can edit what, and what stays private. In traditional coding, these rules live deep in the backend — locked behind servers, databases, and authentication layers.

But in many lightweight vibecoding tools, those rules are defined in the interface instead. It’s like putting your security guard inside the shop window — it looks reassuring, but anyone who walks by can see exactly what they’re protecting.

That’s how data leaks happen. When logic for things like “only show this user their own data” is handled in the frontend — in the code that runs on your browser — clever users (or bots) can often peek behind the curtain.

The result? Emails, transaction histories, or private messages left one misconfiguration away from exposure.

Why Solid Treats Security as Architecture

Solid approaches this differently. It doesn’t just generate apps that work; it generates apps that defend themselves.

Instead of pushing all the logic to the client side, Solid enforces permissions where they belong — in the backend and database layer. That means every request a user makes is checked server-side before any data is shared.

Think of it like this:

Frontend validation is a doorman asking for ID.

Backend validation is a locked safe that checks the ID again before opening.

One can be bypassed from the browser. The other can’t.
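The difference shows up most clearly in a request handler. This minimal sketch assumes the server already knows the logged-in user from a verified session (`session_user_id`). `Request`, `INVOICES`, and both handler names are hypothetical. The first handler lets the browser choose whose data to fetch. The second derives the owner on the server.

```python
from dataclasses import dataclass

@dataclass
class Request:
    session_user_id: int   # set by the server after verifying the login token
    query: dict            # whatever the browser sends; never trusted

INVOICES = [
    {"id": 1, "owner_id": 1, "amount": 120},
    {"id": 2, "owner_id": 2, "amount": 340},
]

def list_invoices_insecure(req: Request) -> list[dict]:
    # Trusts the client: editing ?owner_id=2 in the URL reveals another user's data.
    owner = int(req.query.get("owner_id", req.session_user_id))
    return [inv for inv in INVOICES if inv["owner_id"] == owner]

def list_invoices(req: Request) -> list[dict]:
    # Server-side rule: the filter comes from the verified session, not the request.
    return [inv for inv in INVOICES if inv["owner_id"] == req.session_user_id]

attacker = Request(session_user_id=1, query={"owner_id": "2"})
print(list_invoices_insecure(attacker))  # [{'id': 2, 'owner_id': 2, 'amount': 340}]
print(list_invoices(attacker))           # [{'id': 1, 'owner_id': 1, 'amount': 120}]
```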

Solid also handles secrets — API keys, tokens, and database credentials — the right way: stored securely on the server, never in the public code. Tools that don’t do this leave what’s essentially a “master key” inside the front door lock.
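Here is a minimal sketch of keeping secrets server-side. The server reads them from its environment at startup and exposes only an explicit allow-list of non-secret settings to the frontend. The setting names `DATABASE_URL`, `PAYMENTS_API_KEY`, and `APP_NAME` are hypothetical examples.

```python
import os
from collections.abc import Mapping

# Hypothetical setting names; only the pattern matters.
SECRET_KEYS = ("DATABASE_URL", "PAYMENTS_API_KEY")
PUBLIC_KEYS = ("APP_NAME",)   # explicit allow-list of what the browser may see

def load_server_config(env: Mapping[str, str] = os.environ) -> dict:
    """Read settings from the server's environment instead of hard-coding them."""
    missing = [key for key in SECRET_KEYS if key not in env]
    if missing:
        raise RuntimeError(f"missing secrets: {missing}")  # names only, never values
    config = {key: env[key] for key in SECRET_KEYS}
    config["APP_NAME"] = env.get("APP_NAME", "demo")
    return config

def public_config(server_config: Mapping[str, str]) -> dict:
    """Return only allow-listed settings for the frontend bundle."""
    return {key: server_config[key] for key in PUBLIC_KEYS if key in server_config}

server = load_server_config({
    "DATABASE_URL": "postgres://app:secret@db:5432/app",
    "PAYMENTS_API_KEY": "sk_test_example",
    "APP_NAME": "invoices",
})
print(public_config(server))  # {'APP_NAME': 'invoices'}
```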

Logging Without Spying

A less glamorous but equally crucial piece of trust is logging. Every mature system tracks what happens — who logged in, what actions they took, and where things went wrong.

But logging must be done carefully. Store too much information, and you risk exposing private data in your own records. Store too little, and you lose accountability when something breaks.

Solid’s approach is measured: it redacts sensitive identifiers before storing logs and limits what leaves the server. You still get full observability, but without leaving personal data sitting in plain text.
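The section does not describe Solid’s exact redaction rules. What follows is only a generic sketch of the idea using Python’s standard `logging` module. A filter attached to a handler masks email addresses before the line is written. The regular expression is intentionally simple and will not catch every identifier format.

```python
import logging
import re

# Simple email pattern; real redaction usually covers more identifier types.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

class RedactEmails(logging.Filter):
    """Masks email addresses in each record passed to the handler it is attached to."""
    def filter(self, record: logging.LogRecord) -> bool:
        record.msg = EMAIL.sub("[email redacted]", record.getMessage())
        record.args = ()   # message is now fully formatted; clear args so it isn't re-formatted
        return True        # keep the record, just scrubbed

class ListHandler(logging.Handler):
    """Collects formatted lines in memory (stands in for a file or log service)."""
    def __init__(self) -> None:
        super().__init__()
        self.lines: list[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.lines.append(self.format(record))

log = logging.getLogger("app")
log.setLevel(logging.INFO)
sink = ListHandler()
sink.addFilter(RedactEmails())
log.addHandler(sink)

log.info("login ok for %s from %s", "alice@example.com", "10.0.0.7")
print(sink.lines)  # ['login ok for [email redacted] from 10.0.0.7']
```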

Why Security Defines Maturity

Fast prototypes can cut corners. Production systems can’t.

The moment real users start trusting your app with their names, emails, payments, or messages, the rules change — and vibecoding has to grow up. That’s why tools like Solid feel different: they’re not just “AI app builders,” they’re frameworks that respect the weight of what they’re building.

In the old world, developers wrote code and then added security.

In the new world, vibecoders describe what they want — and the best tools build security in from the first line.

Because in the end, speed is impressive. But trust is what scales.

The Builders and Their Blueprints — Base44, Lovable, and Solid

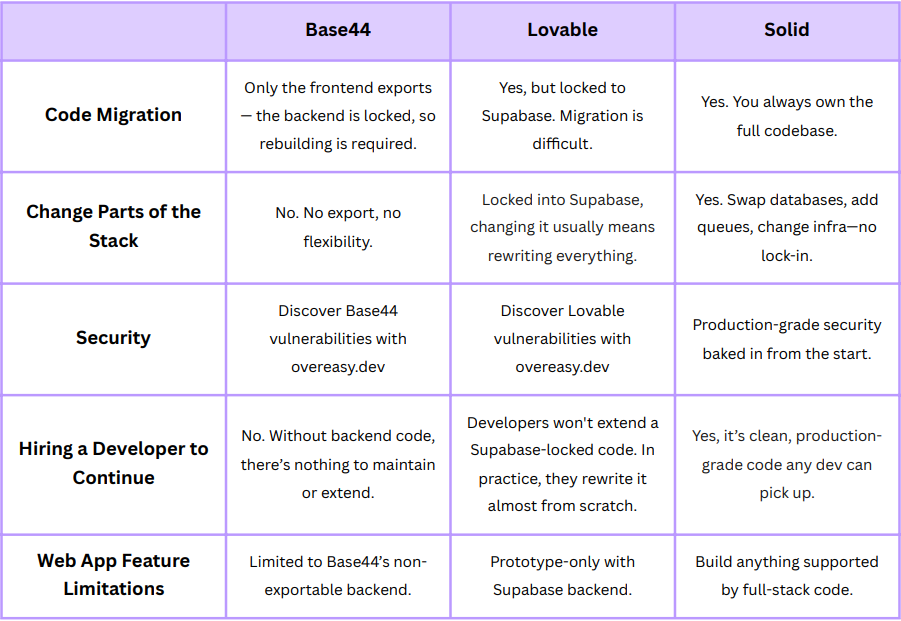

Every vibecoding platform starts with the same promise: describe your idea, and it becomes real. But beneath that shared pitch are very different blueprints — not just technically, but philosophically.

Some tools are designed for accessibility, prioritising instant results. Others aim for longevity, treating every project as a potential business rather than a prototype.

Among today’s leading players, three platforms define the spectrum: Base44, Lovable, and Solid. Each captures a different stage in the evolution of vibecoding — from fast experimentation to full-stack independence.

Base44 — The Beautiful Cage

Base44 is often the first stop for non-technical founders entering the vibecoding space. Its onboarding is sleek, the interface friendly, and the gratification near-instant. In a few clicks, you can go from a blank screen to a working prototype.

But that speed comes with constraints. Base44’s backend — the part that handles your data, permissions, and logic — is prefabricated and closed. You can export the visual layer (the frontend), but not the logic underneath. It’s a bit like designing your dream shop inside a mall: you can decorate however you like, but the lease isn’t yours.

That model works beautifully for validation and proof of concept. The problem appears when projects outgrow the sandbox. Without access to the backend, migrating or scaling means starting over entirely, a frustration that’s become a recurring theme in Base44’s community.

Security audits add another caution. Because backend permissions are abstracted away, data exposure is surprisingly common. In some cases, user emails or transaction data have been accessible through public endpoints. It’s a reminder that convenience and control rarely live in the same room.

Lovable — The Friendly Sandbox

Lovable pushes one step further. Instead of locking users into its own backend, it connects to Supabase, a well-known open-source database and backend platform. That makes it feel more flexible: you can export projects, access your data, and, at least in theory, continue development outside the platform.

In practice, though, Supabase dependency introduces its own limits. Because the backend logic runs through Supabase’s “edge functions,” projects become tightly coupled to that infrastructure. Changing databases or adding new backend services often means rewriting major parts of the codebase. Developers familiar with Supabase describe it as “portable on paper, sticky in practice.”

Still, Lovable has found its niche. It’s ideal for founders who want something more real than a demo but lighter than a full engineering build. For early MVPs, community projects, or quick SaaS experiments, it strikes a balance between speed and substance.

Its biggest trade-off, like Base44’s, lies in depth. Supabase simplifies authentication and data access but can create blind spots in security, especially when logic and permissions live partially in client-side code. Security audits have found Lovable apps exposing sensitive user data, usually through public API routes or missing access controls.

Solid — The Open Workshop

Solid represents a different philosophy altogether. Instead of building within constraints, it generates real, exportable codebases on standard, widely used technologies: React, Node.js, PostgreSQL, and Docker.

That means the end product isn’t an enclosure but a handoff. The same project you prototype with AI can later be opened by any developer, hosted anywhere, and scaled however you choose.

In effect, Solid treats vibecoding as a front door to proper software development, not a parallel universe. Founders can build the first version conversationally, then bring in engineers to extend it — without rewriting from scratch.

Its flexibility also means fewer architectural dead ends: databases can be swapped, message queues added, or cloud infrastructure changed freely. Developers describe Solid’s output as “production-grade scaffolding” — clean enough to scale, readable enough to maintain, and familiar enough for teams to own fully.

The main trade-off is complexity. Solid expects a bit more technical literacy than Base44 or Lovable. It’s less a toy, more a toolkit. You get speed, but also responsibility — including managing your own infrastructure and version control.

For many teams, that’s a feature, not a flaw.

Different Tools, Different Futures

Each of these builders represents a step in the evolution of vibecoding.

Base44 taught the world that building software could feel effortless — that creativity didn’t have to wait for a developer.

Lovable showed that apps could move beyond static demos — with data, logic, and real user flow — even without writing code.

And Solid is showing what happens next: when the same energy and speed are paired with the kind of codebase, infrastructure, and ownership that real products demand.

The shift isn’t about choosing between them. It’s about where the movement itself is going.

Vibecoding is growing up — from drag-and-drop demos to production-grade systems that can scale, migrate, and endure.

And as that maturity unfolds, the line between idea and implementation keeps getting thinner.

The tools are no longer just interpreting our words — they’re beginning to understand our intentions.

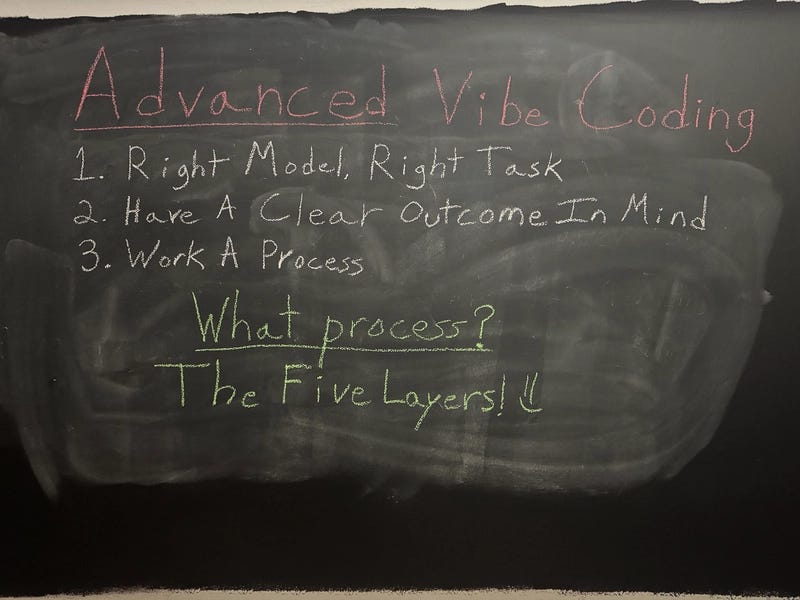

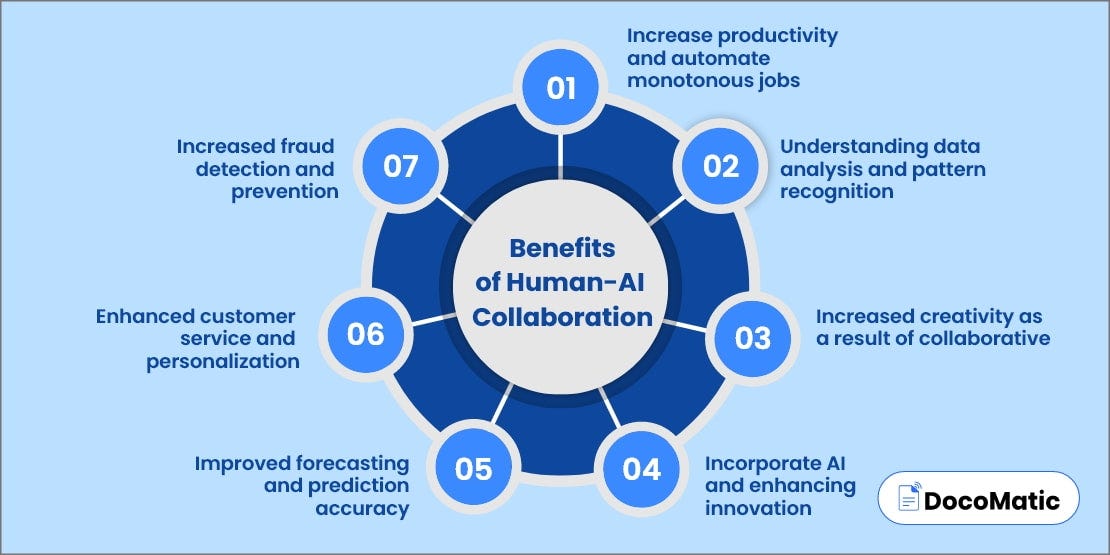

The New Discipline — Designing for AI Collaboration

Every technological leap creates a new kind of craft. The printing press made editors; the camera made directors; the computer made programmers.

Vibecoding is now making something new — a generation of AI collaborators.

We’re entering an era where creation isn’t about writing code, but about shaping conversations. The best vibecoders don’t just know what they want to build — they know how to ask for it.

From Instructions to Intent

Traditional programming is like writing a recipe: every step must be precise, every instruction measurable. Vibecoding flips that. It’s closer to giving creative direction — explaining what you want the final dish to taste like, and trusting the system to work out the ingredients.

That shift demands a new kind of precision: not in syntax, but in clarity.

Vibecoders are learning to describe outcomes, not implementations.

Instead of saying, “write a login function,” they say, “let users sign in with Google and see their own dashboard.” The AI fills in the missing steps, but only if the intention is clear enough to interpret.

The skill isn’t in coding — it’s in communicating complexity simply.

Iteration Becomes Dialogue

In classical development, iteration happens through tickets, feedback cycles, and commits. In vibecoding, it happens in conversation. You describe what to fix, the AI regenerates, and you refine again — often in minutes.

That loop isn’t just efficient; it’s addictive. It mirrors the way designers sketch, writers edit, or musicians improvise — each version feeding the next. The AI doesn’t replace the builder; it accelerates the rhythm of discovery.

Vibecoding at its best feels less like issuing commands and more like coaching intuition into software.

The New Language of Building

As this discipline matures, fluency in AI collaboration will become as valuable as technical literacy once was. Founders who can think structurally — in data models, user flows, and system logic — but express those ideas conversationally will move fastest.

In many ways, vibecoding is teaching builders how to think like engineers without ever opening a manual. Each interaction reveals a little more of how software fits together — how data connects to design, how actions become logic, how structure becomes flow.

This isn’t passive automation; it’s active apprenticeship. The AI isn’t doing the work for you — it’s showing you how creation itself is evolving.

Collaboration as Craft

The real power of vibecoding isn’t that it makes programming easier. It’s that it makes collaboration richer. You’re no longer translating ideas into tickets for someone else to interpret; you’re working directly with the machine, shaping the product as you describe it.

That’s not a loss of creativity — it’s a redefinition of it.

Tomorrow’s great builders won’t be the ones who know the most frameworks or libraries. They’ll be the ones who know how to speak to their tools — who can articulate problems, steer outcomes, and refine direction through dialogue.

Because when you collaborate with an AI that understands intent, your words become architecture.

Looking Ahead — From Vibes to Systems

Every creative revolution starts with intuition and ends with infrastructure.

The first wave of vibecoding was all energy — founders moving fast, sketching ideas, feeling out what was possible. But as the tools evolve, so does the ambition. The next generation of builders doesn’t just want to describe ideas; they want to run companies on them.

That shift changes everything.

Speed alone isn’t enough anymore. The question isn’t “Can I build this?” but “Will it hold up when people start depending on it?”

We’re entering an age where vibecoding stops being a novelty and starts becoming a discipline — one that merges the creativity of design, the logic of engineering, and the language of conversation.

Soon, AI won’t just generate code; it’ll maintain it. Apps will refactor themselves when requirements change. Workflows will adapt automatically to usage patterns. Founders will steer products less like operators and more like conductors — adjusting rhythm, tone, and direction as the system learns alongside them.

And the tools leading that charge are already starting to show what that future looks like.

Platforms like Solid are bridging the gap between imagination and production — turning conversational creativity into real, exportable architecture that can scale, migrate, and mature. They’re proving that vibecoding isn’t just a faster way to build, but a smarter way to begin.

Because the real promise of vibecoding was never about skipping code — it was about shortening the distance between idea and impact.

The next era of builders won’t just be shipping prototypes; they’ll be shipping systems.

And the language they’ll use won’t be syntax — it’ll be intent.

One More Thing

Vibecoding isn’t just changing how we build; it’s changing who gets to build.

And Solid? That’s what happens when the vibe grows up: when founders want both freedom and foundations.

They’ve given The Founders Corner readers free credits to get started. If you want yours, just DM Solid on LinkedIn with the email linked to your account and they’ll activate them for you.

So the next time you catch yourself describing an idea out loud, don’t stop there.

Let the vibe build it.
