The Question AI Founders Avoid Until It Is Too Late

Why the economics you don’t control end up controlling everything else

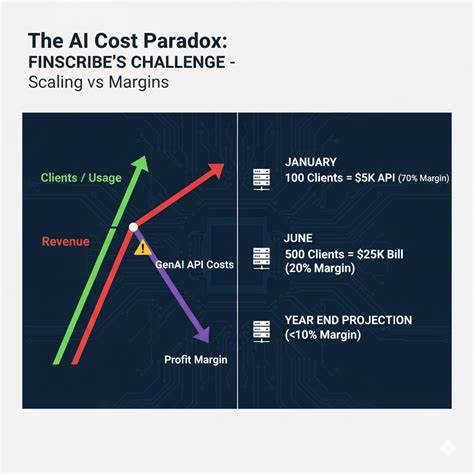

Usage-based AI is the new cloud bill shock.

But unlike cloud, this one cuts directly into your core value.

With cloud, you paid for infrastructure you controlled. You could resize it, reserve it, amortise it, or engineer around it.

With AI, you are paying for capability you rent.

And the difference matters more than most founders want to admit.

Because when your costs scale with usage, and usage is the product, growth stops being a clean story. It becomes a negotiation between what customers want, what models demand, and what your margins can survive.

If the variable cost sits inside the value you sell, you do not have a normal software business.

You have a metered margin business pretending to be SaaS.


Table of Contents

🧾 The Control Illusion, Revenue You Do Not Own

🧮 Why Cheaper Models Do Not Fix the Math

🏃‍♂️ The Treadmill, Where Margin Improvements Go to Die

🧱 When Someone Else Sets Your Unit Economics

🧠 Founders Wisdom, The One Metric That Does Not Lie

🏛️ The OpenAI Case, Why Founders Draw the Wrong Lesson

💡 Founders OS, Designing for Control, Not Hope

🔚 The Closing Reality, Build Leverage or Build Nothing

🧾 The Control Illusion, Revenue You Do Not Own

Usage growth feels comforting.

Charts go up. Dashboards glow green. Investors nod along as you talk about engagement and retention.

But usage growth only feels like control when the value you deliver is decoupled from the compute it consumes.

In AI products, they usually are not.

Your customer pays you for an outcome: an answer, a workflow, a decision, a piece of work completed.

You pay your provider for the process that produced it.

Tokens. Steps. Calls. Retries. Context.

That gap is where control slips.

When a customer renews because the output helped them, but your costs renew because the model had to think harder, you have inserted a third party directly into your gross margin. And that third party sets prices, changes behaviour, and optimises for themselves, not for your business.

This is why many AI businesses feel profitable until they do not.

Their margins are conditional, not structural.

They depend on today’s pricing, today’s defaults, today’s usage patterns. None of which stay still.
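To make "conditional, not structural" concrete, here is a minimal sketch, assuming a flat monthly price per customer and treating inference (tokens consumed times the vendor's per-token rate) as the only cost of revenue. The function name and every number are hypothetical, chosen only to show how a vendor repricing or a shift in default behaviour moves gross margin without you changing anything.

```python
def gross_margin(price_per_customer: float,
                 tokens_per_customer: float,
                 vendor_price_per_million: float) -> float:
    """Gross margin as a fraction of revenue.

    Simplifying assumption: inference is the only cost of revenue.
    """
    inference_cost = tokens_per_customer / 1_000_000 * vendor_price_per_million
    return (price_per_customer - inference_cost) / price_per_customer


# Hypothetical baseline: $50/month plan, 4M tokens per customer, $5 per million tokens.
baseline = gross_margin(50.0, 4_000_000, 5.0)
# The vendor reprices to $7.50 per million; nothing in your product changed.
repriced = gross_margin(50.0, 4_000_000, 7.5)
# Prices hold, but heavier default model behaviour doubles tokens per customer.
heavier = gross_margin(50.0, 8_000_000, 5.0)

print(f"baseline {baseline:.0%}, repriced {repriced:.0%}, heavier {heavier:.0%}")
```

Under these made-up numbers the same product moves from a 60% gross margin to 40% or 20% on changes made entirely outside your codebase.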

🧮 Why Cheaper Models Do Not Fix the Math

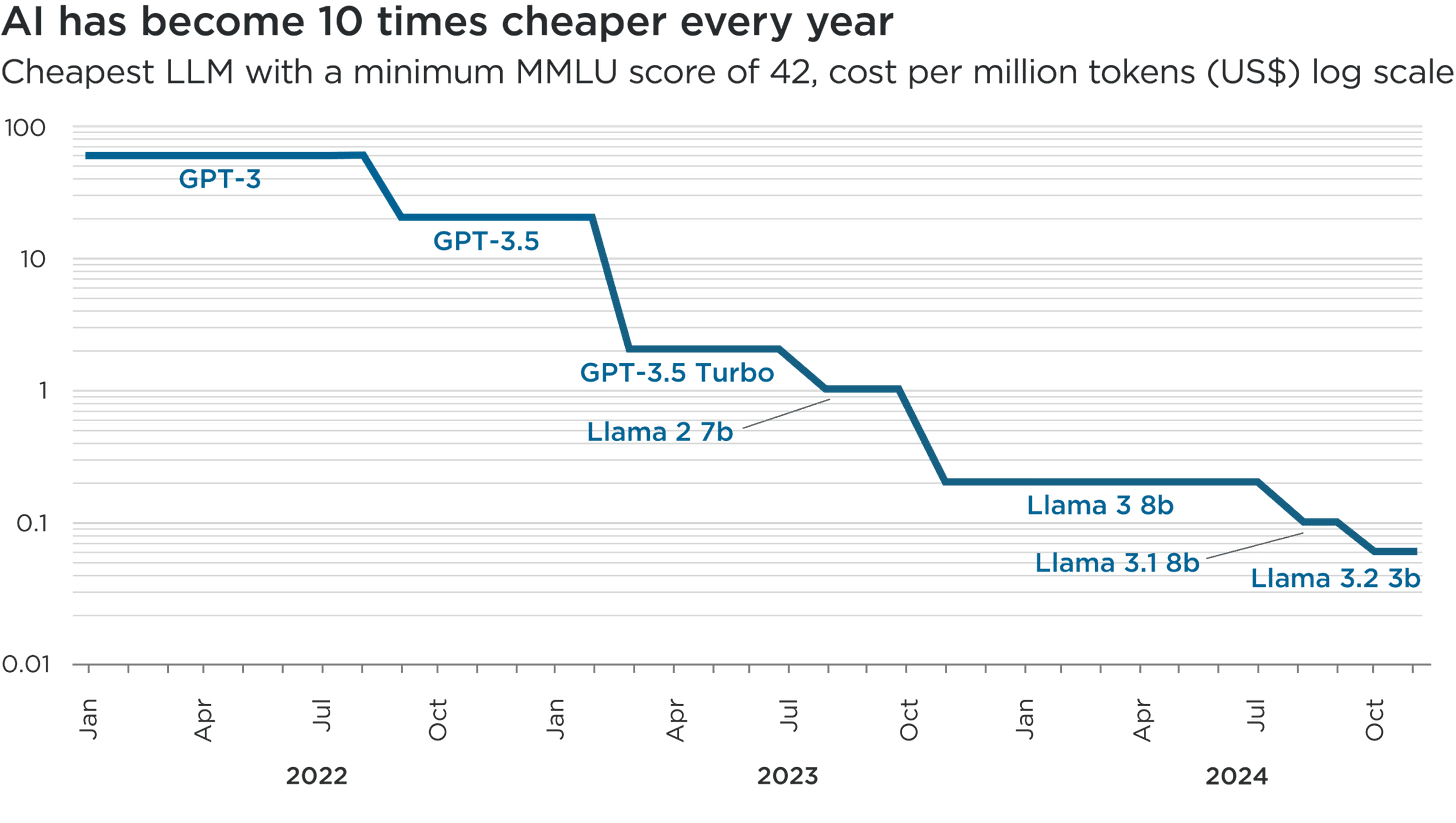

Founders repeat the same reassurance to themselves.

Models are getting cheaper.

Sometimes that is true, in the narrowest possible sense.

Per-token costs can fall while the cost per customer outcome rises.

Because customers do not buy tokens.

They buy tasks.

And tasks are getting heavier.
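A hypothetical worked example of that gap, assuming the cost of a task is simply the tokens it consumes multiplied by the per-token rate. The prices and token counts are illustrative, not any vendor's actual rates.

```python
def cost_per_task(tokens_per_task: int, price_per_million_tokens: float) -> float:
    """Inference cost of one customer task, in dollars."""
    return tokens_per_task * price_per_million_tokens / 1_000_000


# Hypothetical "before": one completion of ~2,000 tokens at $10 per million tokens.
before = cost_per_task(2_000, 10.0)
# Hypothetical "after": the per-token price halves, but the task now uses 6x the tokens.
after = cost_per_task(12_000, 5.0)

print(f"before: ${before:.3f} per task, after: ${after:.3f} per task")
```

The per-token rate fell by half, yet each task now costs three times as much, because the customer is buying the task, not the token.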

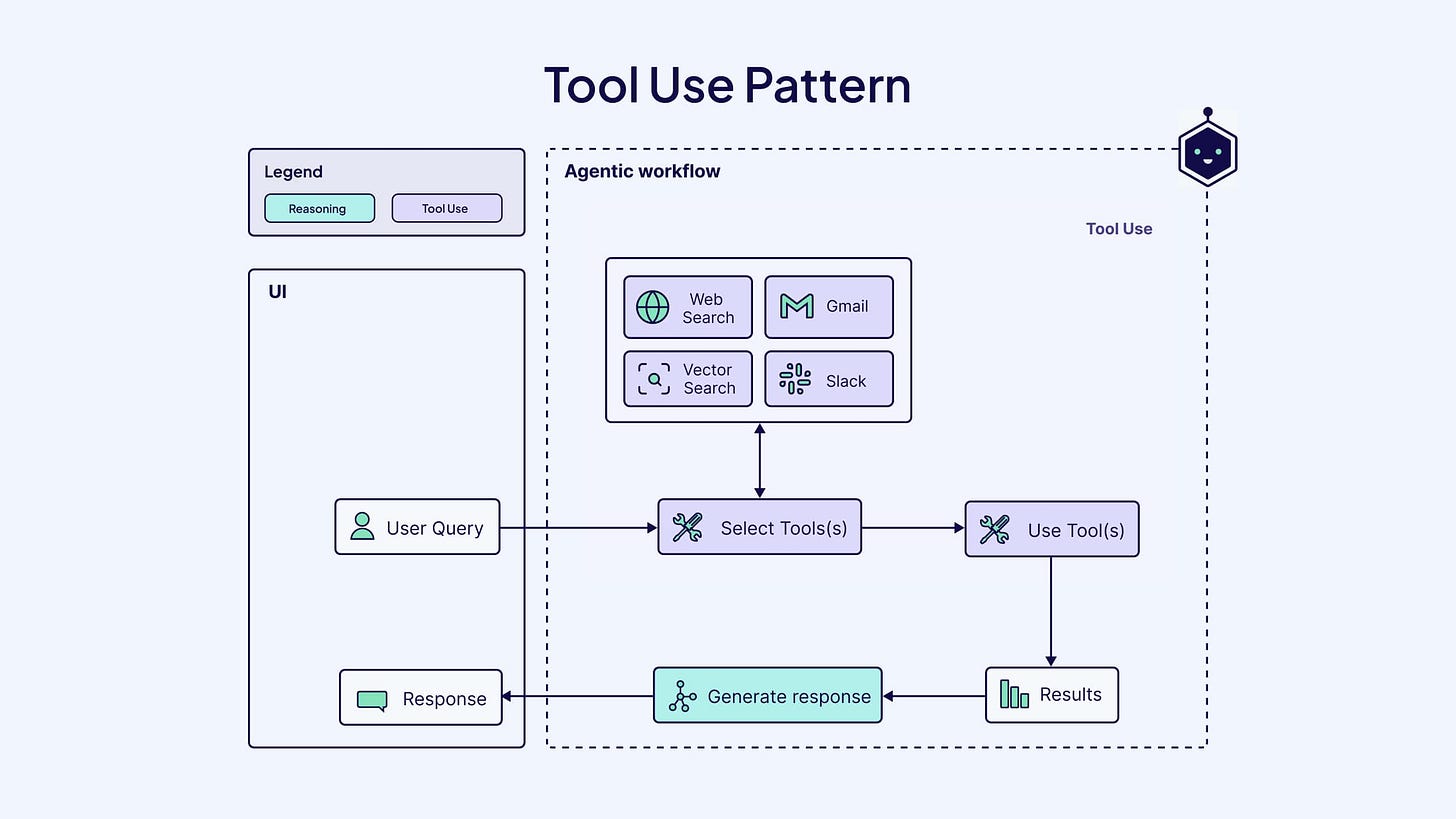

What used to be a single completion is now a chain.

What used to be one response is now planning, tool use, verification, retries, and formatting.

What used to be “good enough” is now “explain your reasoning.”

Each improvement feels incremental.

The total cost jump rarely does.
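The chain described above can be priced out with a minimal sketch, assuming fixed token counts per step and that a failed run is retried in full, up to a cap. The step names follow the list above; the token counts, the 20% failure rate, the three-attempt cap and the $5-per-million price are all hypothetical.

```python
PRICE_PER_MILLION = 5.0  # hypothetical $ per million tokens

# Hypothetical tokens per step for one run of the workflow.
STEP_TOKENS = {
    "planning": 1_500,
    "tool_use": 3_000,
    "verification": 1_000,
    "formatting": 500,
}


def expected_runs(failure_rate: float, max_attempts: int) -> float:
    """Expected number of runs when failures are retried, capped at max_attempts.

    Run k + 1 happens only if the first k runs all failed: probability failure_rate ** k.
    """
    return sum(failure_rate ** k for k in range(max_attempts))


def expected_task_cost(step_tokens: dict[str, int], failure_rate: float,
                       max_attempts: int) -> float:
    tokens_per_run = sum(step_tokens.values())
    runs = expected_runs(failure_rate, max_attempts)
    return tokens_per_run * runs * PRICE_PER_MILLION / 1_000_000


single_completion = 2_000 * PRICE_PER_MILLION / 1_000_000  # one ~2,000-token answer
chained = expected_task_cost(STEP_TOKENS, failure_rate=0.2, max_attempts=3)
print(f"single: ${single_completion:.4f}, chained: ${chained:.4f}, "
      f"ratio: {chained / single_completion:.2f}x")
```

No single step looks expensive, but under these assumptions the chained task costs roughly 3.7 times the single completion it replaced.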

This is the quiet shift most teams miss. Efficiency gains at the model level are competed away at the product level. Lower costs do not widen margins; they raise expectations.

So the benefit shows up as better UX, richer outputs, and more automation, not as retained profit.

If your product improves by consuming more compute, you have not created leverage.

You have just found a nicer way to spend money.

🏃‍♂️ The Treadmill, Where Margin Improvements Go to Die

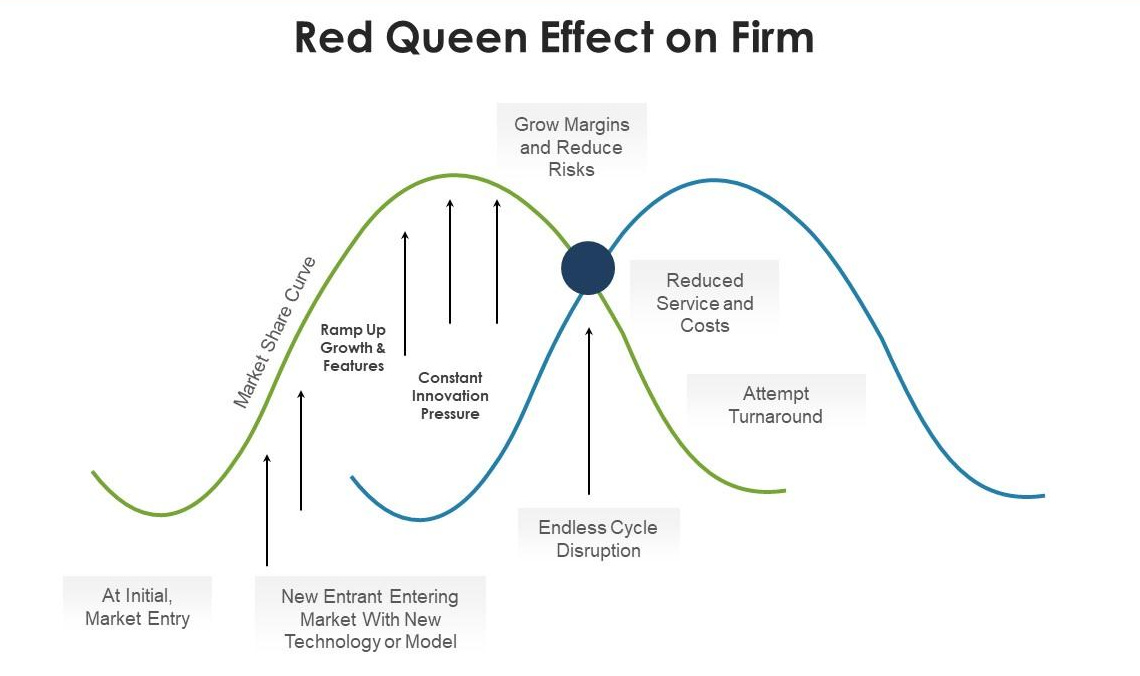

This is why application-layer AI companies rarely see lasting margin expansion, even when the underlying technology improves.

Any cost advantage you gain becomes table stakes.

If you do not pass it on as better quality, faster response, or broader functionality, someone else will. And customers will punish you for it.

So the win does not accrue to your P&L.

It accrues to the product spec.

That creates a treadmill.

Costs fall, expectations rise.

Expectations rise, workflows get heavier.

Workflows get heavier, costs rise again.

From the outside, the business looks healthy.

From the inside, margin feels permanently fragile.

This is not because AI pricing is bad.

It is because competition converts efficiency into obligation.

🧱 When Someone Else Sets Your Unit Economics

Here is the question that actually matters.

Is your gross margin a property of your business, or of your vendor’s current pricing page?

Most AI founders do not know.

Because their margin depends on things they do not control.

Which model tier customers implicitly demand.

How verbose or cautious the model behaves.

How often workflows fail and retry.

How usage concentrates among power users.

How fast competitors force feature parity.

As customers learn where the product creates value, they naturally push it harder. They automate more. They run edge cases. They expand usage internally.

And usage expands into the most expensive parts of the distribution.

So margins do not just compress suddenly.

They erode quietly.

Which is why usage-based pricing is not the enemy.

Unbounded usage-based cost is.

If you cannot answer these questions with confidence:

What a successful workflow costs.

What a failed workflow costs.

Where waste comes from.

How margins behave at three times today’s usage.

Then you do not control your business.

You are just watching it run.
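A minimal sketch of answering those four questions from your own run logs, assuming each workflow run is logged with its inference cost and whether it succeeded, and assuming flat subscription pricing so revenue does not move when usage does. The `WorkflowRun` record, the `four_questions` helper and all figures are hypothetical, and "waste" here only covers spend on failed runs, not every source of waste.

```python
from dataclasses import dataclass


@dataclass
class WorkflowRun:
    cost: float      # inference cost of this run, in dollars
    succeeded: bool  # did the run produce output the customer accepted?


def four_questions(runs: list[WorkflowRun], revenue: float,
                   usage_multiplier: float = 3.0) -> dict[str, float]:
    successes = [r for r in runs if r.succeeded]
    failures = [r for r in runs if not r.succeeded]
    total_cost = sum(r.cost for r in runs)
    failed_cost = sum(r.cost for r in failures)
    return {
        # Fully loaded: successful runs have to pay for the failed ones too.
        "cost_per_success": total_cost / len(successes),
        "cost_per_failure": failed_cost / len(failures) if failures else 0.0,
        # Share of spend on runs that delivered nothing.
        "waste_share": failed_cost / total_cost,
        "margin_now": (revenue - total_cost) / revenue,
        # Flat pricing assumption: revenue stays put while usage and cost scale.
        "margin_at_multiplier": (revenue - total_cost * usage_multiplier) / revenue,
    }


# Hypothetical month for one customer on a $10 flat plan: 80 good runs, 20 failures.
runs = ([WorkflowRun(0.04, True) for _ in range(80)]
        + [WorkflowRun(0.05, False) for _ in range(20)])
report = four_questions(runs, revenue=10.0)
for name, value in report.items():
    print(f"{name}: {value:.4f}")
```

With these made-up numbers the margin is a comfortable 58% today and negative at three times the usage, which is the "watching it run" problem expressed as a single number.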

🧠 Founders Wisdom, The One Metric That Does Not Lie

I wrote about this in one of my BrainDumps, “6 Critical Efficiency Metrics for SaaS Companies”, because founders kept making the same mistake. They treated growth as a cure when, in reality, it is a diagnostic.

In that BrainDump, I argued that gross profit is not just a financial outcome. It is the clearest signal of whether your system is actually improving as usage increases. When customers push harder on the product, gross profit tells you whether the underlying economics are compounding or quietly deteriorating.

This is why I do not get excited by usage charts in isolation. Usage only matters when it improves the quality of profit. If usage grows and gross profit improves, you have built leverage. If usage grows and gross profit stays flat, you are running faster without getting stronger. If usage grows and gross profit declines, growth itself has become the problem.
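The three cases in that paragraph can be written as a simple check across two periods. This is a sketch, assuming you compare gross profit between consecutive periods and treat a small change as "flat"; the `diagnose` name and the 2% band are hypothetical choices for illustration, not figures taken from the text.

```python
def diagnose(usage_before: float, usage_after: float,
             gross_profit_before: float, gross_profit_after: float,
             flat_tolerance: float = 0.02) -> str:
    """Classify a period of usage growth into the three cases described above.

    flat_tolerance (hypothetical): relative changes within +/-2% count as flat.
    """
    if usage_after <= usage_before:
        return "no usage growth to diagnose"
    if gross_profit_before == 0:
        raise ValueError("need a non-zero gross profit baseline")
    change = (gross_profit_after - gross_profit_before) / abs(gross_profit_before)
    if change > flat_tolerance:
        return "leverage: usage grew and gross profit improved"
    if change < -flat_tolerance:
        return "problem: usage grew and gross profit declined"
    return "treadmill: usage grew and gross profit stayed flat"


print(diagnose(100, 150, 1_000, 1_200))
print(diagnose(100, 150, 1_000, 1_005))
print(diagnose(100, 150, 1_000, 900))
```

The point of writing it down is that usage growth alone never returns "leverage"; only the gross profit comparison does.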

Usage is not success.

Profitable usage is success.

And when profitability requires discipline, boundaries, and deliberate design choices, that is not friction. That is the business telling you the truth early enough to do something about it.

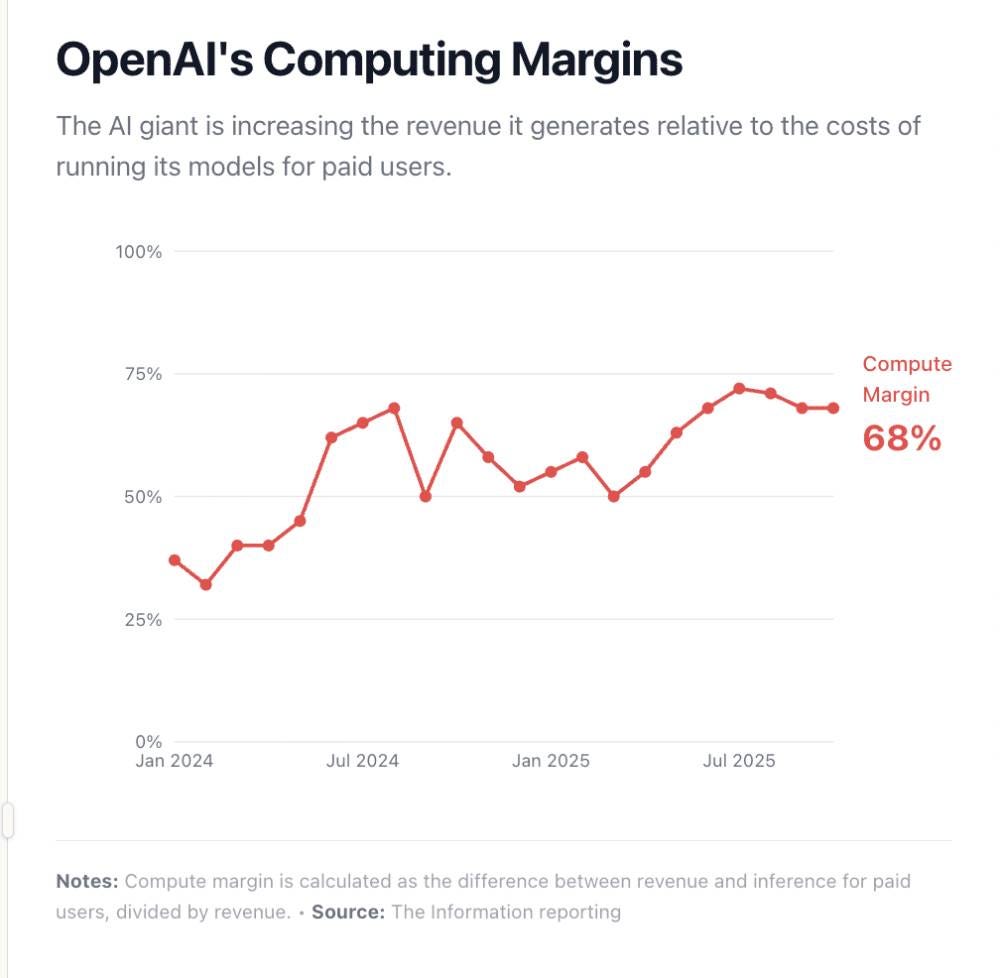

🏛️ The OpenAI Case, Why Founders Draw the Wrong Lesson

The OpenAI margin story is one of the most misunderstood signals in AI right now.

Yes, OpenAI has materially improved its compute margins. That is real. It matters. But it is also specific to where they sit in the stack.

OpenAI captures efficiency gains because it operates at the foundation layer. It benefits from scale, infrastructure optimisation, custom hardware strategies, and pricing power that application companies simply do not have.

For most founders, those gains do not show up as cheaper outcomes. They show up as better models.

And better models immediately reset expectations.

Customers do not pay you less because inference got cheaper upstream. They expect more intelligence, more reliability, more automation, and more capability at roughly the same price.

So when founders point to OpenAI and assume margins will naturally expand over time, they are pointing at the wrong lesson.

Unless you control the model economics yourself, efficiency improvements become an obligation, not leverage.

💡 Founders OS, Designing for Control, Not Hope

This is the work most teams postpone because it does not feel exciting.

It is also the work that decides whether the company compounds or collapses under its own success.

Start by defining your unit of value.

Not tokens. Not calls. Something the customer recognises. A report, a review, a task completed. Measure cost per unit, not per request.
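A minimal sketch of measuring cost per unit of value rather than per request, assuming your request log tags each model call with a workflow ID and the unit of value it contributes to. The log format, the `cost_per_unit` helper and the figures are hypothetical.

```python
from collections import defaultdict

# Hypothetical request log: (workflow_id, unit_of_value, request_cost_in_dollars).
# One "report" may take several model calls; they are rolled up per workflow.
requests = [
    ("wf-1", "report", 0.012),
    ("wf-1", "report", 0.030),
    ("wf-1", "report", 0.008),
    ("wf-2", "report", 0.020),
    ("wf-2", "report", 0.025),
    ("wf-3", "review", 0.015),
]


def cost_per_unit(log: list[tuple[str, str, float]]) -> dict[str, float]:
    """Average cost of one completed unit of value (report, review, ...)."""
    per_workflow: dict[str, float] = defaultdict(float)
    unit_of: dict[str, str] = {}
    for workflow_id, unit, cost in log:
        per_workflow[workflow_id] += cost
        unit_of[workflow_id] = unit
    totals: dict[str, float] = defaultdict(float)
    counts: dict[str, int] = defaultdict(int)
    for workflow_id, cost in per_workflow.items():
        totals[unit_of[workflow_id]] += cost
        counts[unit_of[workflow_id]] += 1
    return {unit: totals[unit] / counts[unit] for unit in totals}


report_costs = [cost for _, unit, cost in requests if unit == "report"]
per_request = sum(report_costs) / len(report_costs)
per_unit = cost_per_unit(requests)
print(f"per request: ${per_request:.4f}, per report: ${per_unit['report']:.4f}")
```

Here the per-request view suggests about $0.019, while a finished report actually costs about $0.0475, the number that has to fit inside what the customer pays for a report.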

Build routing, not preference.

Cheap models by default. Expensive models only when confidence drops. Retries as an exception, not a loop. Every step should be justified by value created, not elegance.
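A minimal routing sketch, assuming each model call returns an answer plus some confidence score, and that a hypothetical `call_model` function wraps whichever provider you use. The tier names, per-call costs and the 0.8 confidence floor are placeholders, not real products or recommended values.

```python
from typing import Callable

# Hypothetical model tiers: (name, cost per call in dollars).
CHEAP = ("small-model", 0.002)
EXPENSIVE = ("large-model", 0.030)


def route(task: str,
          call_model: Callable[[str, str], tuple[str, float]],
          confidence_floor: float = 0.8,
          max_retries: int = 1) -> tuple[str, float]:
    """Cheap tier first; escalate only when confidence drops below the floor.

    Escalation gets one attempt plus at most max_retries retries, so a hard task
    cannot loop forever. Returns (answer, total_cost); if confidence never clears
    the floor, the last answer is returned and the caller should flag it.
    """
    answer, confidence = call_model(CHEAP[0], task)
    spent = CHEAP[1]
    if confidence >= confidence_floor:
        return answer, spent
    for _ in range(1 + max_retries):
        answer, confidence = call_model(EXPENSIVE[0], task)
        spent += EXPENSIVE[1]
        if confidence >= confidence_floor:
            break
    return answer, spent


def fake_model(model: str, task: str) -> tuple[str, float]:
    # Stand-in for a real API call: the small model is unsure about "hard" tasks.
    if model == "small-model" and "hard" in task:
        return "draft", 0.5
    return f"{model} answer", 0.9


print(route("easy summary", fake_model))
print(route("hard contract review", fake_model))
```

The design choice is that the expensive path has to be earned by a low-confidence signal, and its cost is capped by `max_retries` rather than by hope.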

Add governors inside the product.

Context limits. Summarisation. Caching. Stop conditions. Tiered access. These are not constraints; they are honesty mechanisms that keep value and cost aligned.
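Three of those governors (a context limit, caching, and a budget-based stop condition) fit in a small wrapper. This is a sketch under stated assumptions: the `Governor` class is hypothetical, truncation stands in for real summarisation, costs are a fixed amount per call, and tiered access is not covered.

```python
import hashlib
from typing import Callable, Optional


class Governor:
    """Hypothetical per-task governor: caps context, caches repeated requests,
    and refuses new model calls once the task's budget would be exceeded."""

    def __init__(self, max_context_chars: int, budget_per_task: float):
        self.max_context_chars = max_context_chars
        self.budget_per_task = budget_per_task
        self.cache: dict[str, str] = {}
        self.spent = 0.0

    def trim_context(self, context: str) -> str:
        # Context limit: keep only the most recent characters
        # (a crude stand-in for real summarisation).
        return context[max(0, len(context) - self.max_context_chars):]

    def run(self, prompt: str, context: str,
            call_model: Callable[[str, str], str],
            cost_per_call: float) -> Optional[str]:
        context = self.trim_context(context)
        key = hashlib.sha256(f"{prompt}\x00{context}".encode()).hexdigest()
        if key in self.cache:
            return self.cache[key]       # Caching: repeat request, no new spend.
        if self.spent + cost_per_call > self.budget_per_task:
            return None                  # Stop condition: budget exhausted.
        self.spent += cost_per_call
        result = call_model(prompt, context)
        self.cache[key] = result
        return result


calls: list[str] = []


def fake_model(prompt: str, context: str) -> str:
    calls.append(prompt)  # stand-in for a paid API call
    return f"answer to {prompt}"


gov = Governor(max_context_chars=2_000, budget_per_task=0.05)
first = gov.run("summarise", "long account history", fake_model, cost_per_call=0.02)
repeat = gov.run("summarise", "long account history", fake_model, cost_per_call=0.02)
second = gov.run("classify", "long account history", fake_model, cost_per_call=0.02)
blocked = gov.run("extract", "long account history", fake_model, cost_per_call=0.02)
```

In this example the repeat request is served from cache, and the fourth call is refused because it would push the task past its $0.05 budget.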

Price like costs are real.

Because they are. Fixed pricing with guardrails, outcome-based pricing, or two-part tariffs all work. Pretending costs do not exist does not.
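A minimal comparison of a flat plan and a two-part tariff, assuming a fixed fee that covers an allowance of units plus a per-unit price beyond it, and a constant cost per unit. The $100 fee, 200-report allowance, $0.60 overage and $0.25 cost per report are hypothetical.

```python
COST_PER_REPORT = 0.25  # hypothetical fully loaded inference cost per report
FLAT_FEE = 100.0        # hypothetical "unlimited" monthly plan


def two_part_invoice(units_used: int, base_fee: float, included_units: int,
                     overage_price: float) -> float:
    """Two-part tariff: a fixed fee covering an allowance, plus a per-unit price beyond it."""
    overage_units = max(0, units_used - included_units)
    return base_fee + overage_units * overage_price


def margin(revenue: float, units_used: int, cost_per_unit: float) -> float:
    return (revenue - units_used * cost_per_unit) / revenue


for units in (200, 600):
    tariff = two_part_invoice(units, base_fee=100.0, included_units=200, overage_price=0.60)
    print(f"{units} reports: flat margin {margin(FLAT_FEE, units, COST_PER_REPORT):.0%}, "
          f"two-part margin {margin(tariff, units, COST_PER_REPORT):.0%}")
```

Both plans earn the same 50% margin at 200 reports. At three times that usage the flat plan goes to -50%, while the two-part tariff stays above 50% because revenue now moves with cost.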

And finally.

Track contribution margin per workflow.

Gross margin hides volatility. Contribution margin tells you whether growth is making you stronger or just busier.
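A minimal sketch of contribution margin per workflow, assuming you can attribute revenue to each workflow and that its variable costs are inference plus other per-use costs such as human review or third-party tool calls. The `Workflow` record, workflow names and figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Workflow:
    name: str
    revenue: float              # revenue attributed to this workflow for the period
    inference_cost: float       # model/API spend
    other_variable_cost: float  # e.g. human review, third-party tool calls (assumed)

    @property
    def contribution(self) -> float:
        return self.revenue - self.inference_cost - self.other_variable_cost

    @property
    def contribution_margin(self) -> float:
        return self.contribution / self.revenue


workflows = [
    Workflow("report", revenue=8_000, inference_cost=1_500, other_variable_cost=500),
    Workflow("agentic_review", revenue=2_000, inference_cost=2_200, other_variable_cost=300),
]

blended = sum(w.contribution for w in workflows) / sum(w.revenue for w in workflows)
print(f"blended: {blended:.0%}")
for w in workflows:
    print(f"{w.name}: {w.contribution_margin:.0%}")
```

Under these made-up numbers the blended figure is a healthy-looking 55%, while one of the two workflows loses 25 cents on every dollar it brings in. Growth in that workflow makes the company busier, not stronger.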

🔚 The Closing Reality, Build Leverage or Build Nothing

Inference is not dangerous because it is expensive.

It is dangerous because it is seductive.

Every improvement tempts you to spend more compute. Every competitive move raises the bar. Every happy customer pushes harder on the system.

If you do not shape usage deliberately, usage will shape you.

So remember this.

If your AI costs scale with usage, you only control your business if you control the shape of that usage.

Control is not optimism.

It is architecture.

It is pricing.

It is discipline.

Everything else is just growth theatre, and the market is about to get very good at spotting the difference.

Want the full BrainDumps collection?

I’ve compiled all 70+ LinkedIn BrainDumps into The Big Book of BrainDumps. It’s the complete playbook for founders who want repeatable, actionable growth frameworks. Check it out here.
